Most conspiracy theories neither make sense nor withstand any scrutiny. They usually involve operations so immense that it's basically impossible for them to be kept secret, and all the proof given by conspiracy theorists usually has a very simple explanation (usually much simpler than the explanation given by the theorists).

Yet conspiracy theories are very popular and appealing. Even when they don't make sense and there's just no proof, many people still believe them. Why?

One big reason for this is that some conspiracy theorists are clever. They use psychology to make their theories sound more plausible. They appeal to certain psychological phenomena which make people tend to believe them. However, these psychological tricks are nothing more than logical fallacies. They are simply so well disguised that many people can't see them for what they are.

Here are some typical logical fallacies used by conspiracy theorists:

The so-called "bandwagon effect" is a psychological phenomenon where people are eager to believe things if most of the people around them believe them too. Sometimes the thing in question is true and there's no harm, but sometimes it's a misconception, urban legend or, in this case, an unfounded conspiracy theory, in which case the "bandwagon effect" bypasses logical thinking for the worse.

The most typical form of appealing to the bandwagon effect is to say something along the lines of "30% of Americans doubt that..." or "30% of Americans don't believe the official story". This is also called an argumentum ad populum, which is a logical fallacy.

Of course that kind of sentence at the beginning of a conspiracy theory doesn't make any sense. It doesn't prove anything relevant. It's not like the theory becomes more true if more people believe in it.

Also, the percentage itself is always very dubious. It may be completely fabricated, or exaggerated by interpreting the poll results conveniently (e.g. one easy way of bumping up the percentage is to count everyone who didn't answer or didn't know what to say as "doubting the official story"). Even if it were a completely genuine number, it would still not be proof of anything other than that there is a certain number of gullible people in the world.
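The arithmetic of that trick is easy to sketch. The poll numbers below are entirely made up for illustration (they are not from any real survey), and the function name is likewise just a hypothetical helper:

```python
def doubt_percentage(doubt, believe, undecided, count_undecided_as_doubt=False):
    """Return the share of respondents reported as 'doubting', in percent."""
    total = doubt + believe + undecided
    # The trick: silently lump "no answer / don't know" in with the doubters.
    doubters = doubt + (undecided if count_undecided_as_doubt else 0)
    return 100.0 * doubters / total


# Hypothetical poll of 1000 people (invented numbers, not real data).
honest = doubt_percentage(doubt=120, believe=700, undecided=180)
inflated = doubt_percentage(doubt=120, believe=700, undecided=180,
                            count_undecided_as_doubt=True)
print(f"honest: {honest:.0f}%, inflated: {inflated:.0f}%")
```

With these invented figures, the very same poll can be reported as either 12% or 30% "doubting", depending only on how the undecided answers are counted.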

That kind of sentence is not proof of anything, yet it's one of the most used sentences in conspiracy theories. It tries to appeal to the bandwagon effect. It's effectively saying: "Already this many people doubt the official story, and the numbers are increasing. Are you going to be left alone believing the official story?"

Conspiracy theories in general, and the "n% of people doubt the story" claims in particular, also appeal to a sense of rebellion in people.

As Wikipedia puts it, "a rebellion is, in the most general sense, a refusal to accept authority."

People don't want to be sheep who are patronized by authority and told what they have to do and how they have to think. People usually distrust authorities and many believe that authorities are selfish and abuse people for their own benefit. This is an extremely fertile ground for conspiracy theories.

This is so ingrained in people that a phrase like "the official story" has basically become a synonym for "a coverup/lie". Whenever "the official story" is mentioned, it immediately makes people think that it's some kind of coverup, something not true.

Conspiracy theorists are masters at abusing this psychological phenomenon to their advantage. They basically insinuate that "if you believe the official story then you are gullible because you are being lied to". They want to make it feel that doubting the official story is a sign of intelligence and logical thinking. However, believing a conspiracy theory usually shows, quite ironically, a great lack of logical thinking.

This is an actual quote from a JFK assassination conspiracy theory website. It's almost as hilarious as it is contradictory:

In the end, you have to decide for yourself what to believe. But don't just believe what the U.S. Government tells you!

(In other words, believe anything you want except the official story!)

"Shotgun argumentation" is a metaphor from real life: It's much easier to hunt a rabbit with a shotgun than with a rifle. This is because a rifle only fires one bullet and there's a high probability of a miss. A shotgun, however, fires tens or even hundreds of small pellets, and the probability of at least one of them hitting the rabbit is quite high.

Shotgun argumentation has the same basic idea: The more small arguments or "evidence" you present in favor of some claim, the higher the probability that someone will believe you regardless of how ridiculous those arguments are. There are two reasons for this:

Firstly, just the sheer amount of arguments or "evidence" may be enough to convince someone that something strange is going on. The idea is basically: "There is this much evidence against the official story, there must be something wrong with it." One or two pieces of "evidence" may not be enough to convince anyone, but collect a couple of hundred pieces of "evidence" and it immediately starts being more believable.

Of course the fallacy here is that the amount of "evidence" is in no way proof of anything. The vast majority, and usually all, of this "evidence" is easily explainable and just patently false. There may be a few points which are more difficult to explain, but they alone wouldn't be so convincing.

Secondly, and more closely related to the shotgun metaphor: The more arguments or individual pieces of "evidence" you have, the higher the probability that at least some of them will convince someone. Someone might not get convinced by most of the arguments, but among them there may be one or a few which sound so plausible to him that he is then convinced. Thus one or a few of the "pellets" hit the "rabbit" and killed it: Mission accomplished.

I have a concrete example of this: I have a friend who is academically educated (an MSc) and does research work (relating to computer science) at a university. He is rational, intelligent and well-educated.

Yet still this person, at least some years ago, completely believed the Moon hoax theory. Why? He said to me quite explicitly that there was one thing that convinced him: The flag moving after it had been planted on the ground.

One of the pellets had hit the rabbit and killed it. The shotgun argumentation had been successful.

If even highly educated academics can fall for such "evidence" (which is easily explained), how much more easily are more "regular" people going to be swayed by the sheer amount of it? Sadly, quite a lot more easily.
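The "pellets" idea can be put in rough numbers. The sketch below assumes, purely for illustration, that each argument independently has the same small chance of convincing a given person; real arguments are neither independent nor equally persuasive, and the 1% figure is invented:

```python
def prob_at_least_one_hit(p_single, n_arguments):
    """Chance that at least one of n independent arguments convinces someone.

    p_single is the (assumed) chance that any single argument does so.
    """
    # Complement rule: 1 minus the chance that every argument fails.
    return 1.0 - (1.0 - p_single) ** n_arguments


# Hypothetical: each argument convinces a given reader 1% of the time.
for n in (1, 10, 100, 200):
    print(n, round(prob_at_least_one_hit(0.01, n), 3))
```

Under these assumptions a single weak argument almost never works, while a hundred equally weak ones succeed roughly 63% of the time. None of the arguments has become any better; there are simply more of them.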

Most conspiracy theorists continue to present the same old tired arguments which are very easy to prove wrong. They need all those arguments, no matter how ridiculous, for their shotgun argumentation tactics to work.

A "straw man argument" is the process of taking an argument of the opponent, distorting it or taking it out of context so that it basically changes meaning, and then ridiculing it in order to make the opponent look bad.

For example, a conspiracy theorist may say something like: "Sceptics argue that stars are too faint to see in space (which is why there are no stars in photographs), yet astronauts said that they could see stars."

This is a perfect example of a straw man argument. That's taking an argument completely out of context and changing its meaning.

It's actually a bit unfortunate that many debunking sites use the sentence "the stars are too faint to be seen" when explaining the lack of stars in photographs. That sentence, while in its context not false, is confusing and misleading. It's trying to put in simple words a more technical explanation (which usually follows). Unfortunately, it's too simplistic and good material for straw man arguments. I wish debunkers stopped using simplistic sentences like that one.

(The real explanation for the missing stars is, of course, related to the exposure time and aperture of the cameras, which were set to photograph the Moon's surface illuminated by direct sunlight. The stars are not bright enough for such short exposure times. If the cameras had been set up to photograph the stars, the lunar surface would have been completely overexposed. This is basic photography.)

There's a very common bad habit among the majority of people: They believe that credible sources have said/written whatever someone claims they have said or written. Even worse, most people believe that a source is credible or even exists just because someone claims that it is credible and exists. People almost never check that the source exists, that it's a credible source and that it has indeed said what was claimed.

Conspiracy theorists know this and thus abuse it to the maximum. Sometimes they fabricate sources or stories, and sometimes they just cite nameless sources (using expressions like "experts in the field", "most astronomers", etc).

This is an actual quote from the same JFK assassination conspiracy theory website as earlier:

Scientists examined the Zapruder film. They found that, while most of it looks completely genuine, some of the images are impossible. They violate the laws of physics. They could not have come from Zapruder's home movie camera.

Needless to say, the web page does not give any references or sources, or any other indication of who these unnamed "scientists" might be or what their credentials are. (My personal guess is that whenever the website uses the word "scientist" or "researcher", it refers to other conspiracy theorists who have no actual education or competence in the relevant fields of science, and who are, like all such conspiracy theorists, just seeing what they want to see.)

A common tactic of conspiracy theorists is to take statements by credible persons or newspaper articles which support the conspiracy theory and present these statements or articles as if they were the truth. If a later article in the same newspaper corrects the mistake in the earlier article, or if the person who made the statement later says that he was wrong or quoted out of context (i.e. he didn't mean what people thought he meant), conspiracy theorists happily ignore the correction.

Since people seldom check the sources, they will believe that the statement or newspaper article is the only thing that person or newspaper has said about the subject.

This is closely related to (and often overlaps with) the concept of quote mining (which is the practice of carefully selecting small quotes, which are often taken completely out of context, from a vast selection of material, in such a way that these individual quotes seem to support the conspiracy theory).

Sometimes that source is not credible (because it's just another conspiracy theorist) but people have little means of knowing this.

Cherry-picking is more a deliberate act of deception than a logical fallacy, but nevertheless an extremely common tactic.

Cherry-picking happens when someone deliberately selects from a wide variety of material only those items which support the conspiracy theory, while ignoring and discarding those which don't. When this carefully chosen selection of material is then presented as a whole, it easily misleads people into thinking that the conspiracy theory is supported by evidence.

This is an especially popular tactic among 9/11 conspiracy theorists: They will only choose those published photographs which support their claims, while outright ignoring those which don't. The Loose Change "documentary" is quite infamous for doing this, and pulling it off rather convincingly.

The major problem with this is, of course, that it's pure deception: The viewer is intentionally given only carefully selected material, while leaving out the parts which would contradict the conspiracy theory. This is a deliberate act. The conspiracy theorists cannot claim honesty while doing clear cherry-picking.

Just one example: There's a big electrical transformer box outside the Pentagon which was badly damaged by the plane before it hit the building. That box could not have sustained such damage if the building had been hit by a missile, as claimed by conspiracy theorists (the missile would have exploded when hitting the box, several tens of meters away from the building). Conspiracy theorists will usually avoid using any photographs which show the damaged transformer box because it contradicts their theory. They are doing this deliberately. They cannot claim honesty while doing this.

Scientists are human, and thus imperfect and fallible. Individual scientists can be dead wrong, make the wrong claims and even be deceived into believing falsities. Being a scientist does not give a human being some kind of magic power to resist all deceptions and delusions, to see through all tricks and fallacies and to always know the truth and discard what is false.

But science does not stand on individual scientists, for this exact reason. This is precisely why the scientific process requires so-called peer review. One scientist can be wrong, ten scientists can be wrong, and even a hundred scientists can be wrong, but when their claims are peer-reviewed and studied by the whole scientific community, the likelihood of the falsities not being caught decreases dramatically. It's very likely that someone somewhere is going to object and raise questions if there's something wrong with a claim, and this will bring the problem to the attention of the whole community. Either the objections are dealt with and explained, or the credibility of the claim gets compromised. A claim does not become accepted by the scientific community unless it passes the test of peer review. And this is why science works. It does not rely on individuals, but on the whole.

Sometimes some individual scientists can be deceived into believing a conspiracy theory. As said, scientists do not have any magical force that keeps them from being deceived. Due to their education the likelihood might be slightly lower than with the average person, but in no way is it completely removed. Scientists can and do get deceived by falsities.

Thus sometimes the conspiracy theorists will convince some PhD or other such person of high education and/or high authority, and if this person becomes vocal enough, the conspiracy theorists will then use him as an argument for the conspiracy theory. It can be rather convincing if conspiracy theorists can say "numerous scientists agree that the official explanation cannot be true, including (insert some names here)".

However, this is a fallacy called argument from authority. A claim doesn't become true just because a PhD makes it, not even if a hundred PhDs make it. It doesn't even become any more credible.

As said, individual scientists can get deceived and deluded. However, as long as their claims do not pass the peer review process, their claims are worth nothing from a scientific point of view.

In the fallacy known as argument from ignorance, the word "ignorance" is not an insult; it simply means "not knowing something".

Simply put, argument from ignorance happens when something with no apparent explanation is pointed out (for example in a photograph), and since there's no explanation, it's presented as evidence of foul play (eg. that the photograph has been manipulated).

This can be seen as somewhat related to cherry-picking: The conspiracy theorist will point out something in the source material or the accounts of the original event which is not easy to immediately explain. A viewer with no experience or expertise in the subject matter might be unable to come up with an explanation, or to identify the artifact/phenomenon. The conspiracy theorist then abuses this to claim that the unexplained artifact or phenomenon is evidence of fakery or deception.

Of course this is a fallacy. Nothing can be deduced from an unexplained phenomenon or artifact. As long as you don't know what it is, you can't take it as evidence of anything.

(In most cases such things have a quite simple and logical explanation; it's just that in order to figure it out, you need to have the proper experience on the subject, or alternatively to have someone with experience explain it to you. After that it becomes quite self-evident. It's a bit like a magic trick: When you see it, you can't explain how it works, but when someone explains it to you, it often is outright disappointingly simple.)

It might sound rather self-evident when explained like this, but people still get fooled in an actual situation.

In its most basic and bare-bones form, argument from incredulity takes the form of "I can't even begin to imagine how this can work / be possible, hence it must be fake". This is a variation or subset of the argument from ignorance. Of course conspiracy theorists don't state the argument so blatantly, but use much subtler expressions.

Example: Some (although not all) Moon Landing Hoax conspiracy theorists state that the Moon Lander could not have taken off from the surface of the Moon, because a rocket on its bottom side would have made it rotate wildly and randomly. In essence what the conspiracy theorist is saying is "I don't understand how rocketry can work, hence this must be fake", while trying to convince the reader of the same.

The problem of basic rocketry (i.e. how a rocket with a propulsion system at its back end can maintain stability and fly straight) is indeed quite a complex and difficult one (which is where the colloquial term "rocket science", meaning something extremely complicated and difficult, comes from), but it was solved in the 1920s and 1930s. This isn't even something you have to understand or take on faith: It's something you can see with your own eyes (unless you believe all the videos you have ever seen of missiles and rockets are fake).

In the real world things that can be considered coincidences happen all the time. Sometimes even coincidences that are so unlikely that they are almost incredible. Of course most coincidences are actually much more likely to happen than we usually think.

Just as a random example, suppose that an asteroid makes a close encounter with the Earth, and the same day that this close encounter happens, a big earthquake happens somewhere on Earth. Coincidence? Well, that's actually very likely: Every year there are over a thousand earthquakes of magnitude 5 or higher on Earth, which averages out to several per day. A significant earthquake happening on any one specific day is therefore not surprising at all. The two incidents may very well not be related at all, but just happened on the same day.
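As a rough back-of-the-envelope check, the sketch below treats earthquakes as independent events spread evenly over the year (a Poisson model). That is a simplifying assumption, since real earthquakes cluster (aftershocks, for instance), and it uses the "over a thousand per year" figure from above as a lower bound:

```python
import math


def prob_at_least_one_event(events_per_year, days=1):
    """Chance of at least one event in the given number of days.

    Assumes events are independent and evenly spread over the year
    (a Poisson process), which is only an approximation.
    """
    expected = events_per_year * days / 365.0  # average count in the window
    return 1.0 - math.exp(-expected)           # 1 - P(no events at all)


# Lower-bound figure from the text: 1000 quakes of magnitude 5+ per year.
p = prob_at_least_one_event(1000)
print(f"{p:.2f}")
```

Under these assumptions, the chance that at least one magnitude 5+ earthquake happens on any given day comes out well above 90%, so a quake on the same day as an asteroid flyby is the expected outcome rather than an eerie coincidence.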

In conspiracy theory land, however, there are no such things as coincidences. Everything always happens for a reason, and everything is always related somehow.

For example, did some politician happen to cancel a flight scheduled on the same day as a terrorist attack involving airplanes happened? In conspiracy theory land that cannot be a coincidence. There must be a connection. (It doesn't matter that all kinds of politicians are traveling by plane all the time, and cancelling such flights is not at all uncommon, and hence some random politician cancelling a flight for the same day as the terrorist attack happens isn't a very unlikely happenstance. Except for conspiracy theorists, of course.)

Or how about the Pentagon having blast-proof windows on one of its walls, and a plane crashing precisely on that wall? Given that the Pentagon has 5 outer walls, the likelihood of this happening is roughly 20%, which isn't actually all that small. One in five isn't very unlikely, except of course in conspiracy fantasy land, where it cannot be a coincidence.

It's not impossible for even extremely unlikely coincidences to sometimes happen, but conspiracy theorists just love to take even the likeliest of coincidences and jump to conclusions. Just to add another piece of "evidence" for their shotgun argumentation.

Pareidolia is also not a logical fallacy per se, but more a fallacy of perception.

Pareidolia is, basically, the phenomenon which happens when we perceive recognizable patterns in randomness, even though the patterns really aren't there. For example, random blotches of paint might look like a face, or random noise might sound like a spoken word (or even a full sentence).

Pareidolia is a side effect of pattern recognition in our brain. Our visual and auditory perception is heavily based on pattern recognition. It's what helps us understand spoken languages, even when they are spoken by different people with different voices, at different speeds and with different accents. It's what helps us recognize objects even if they have a slightly different shape or coloring which we have never seen before. It's what helps us recognize people and differentiate them from each other. It's what helps us read written text at amazing speeds by simply scanning the written lines visually (you are doing precisely that right now). In fact, we could probably not even survive without pattern recognition.

This pattern recognition is also heavily based on experience: We tend to recognize things like shapes and sounds when we have previous experience with similar shapes and sounds. The context also helps us in this pattern recognition, often very significantly. When we recognize the context, we tend to expect certain things, which in turn helps us perform the pattern recognition more easily and faster. For example, if you open a book, you already expect to see text inside, and you are already prepared to recognize it. In a context which is completely unrelated to written text (for a completely random example, if you are examining your fingernails) you are not expecting to see text, and thus you don't recognize it as easily.

Pareidolia happens when our brain recognizes, or thinks it recognizes, patterns where there may be only randomness, or in places which are not random per se, but completely unrelated to this purported "pattern".

As noted, pareidolia is greatly helped if we are expecting to see a certain pattern. This predisposes our brain to try to recognize that exact thing, making it easier.

This is the very idea in so-called backmasking: Playing a sound, for example a song, backwards and then recognizing something in the garbled sounds that result from this. When we are not expecting anything in particular, we usually only hear garbled noises. However, if someone tells us what we should expect, we immediately "recognize" it.

However, we are just fooling our own pattern recognition system into perceiving something which isn't really there. If someone else is told to expect a slightly similar-sounding, but different message, that other person is very probably going to hear that. You and that other person are both being misled by playing with the pattern recognition capabilities of your brain.

Conspiracy theorists love abusing pareidolia. They will make people see patterns where there are none, and people will be fooled into believing that the patterns really are there, and thus are proof of something.

One thing conspiracy theorists really love to do is inspecting photographs of the events and trying to find "flaws" and signs of fakery.

The thing about photography is that it's very easy to misinterpret what the photo is showing. Details that were in the actual scene might get lost in the photo, making it look different from what it really was. Also, photos often suffer from artifacts that are caused by the physics of photography, making things appear in photos that weren't there in actuality. (I have written an entire article on why photographic evidence is almost worthless precisely because of all the artifacts and loss of information that are inherent to photography.) The conspiracy theorists will often abuse this (intentionally or not) to make claims of fakery.

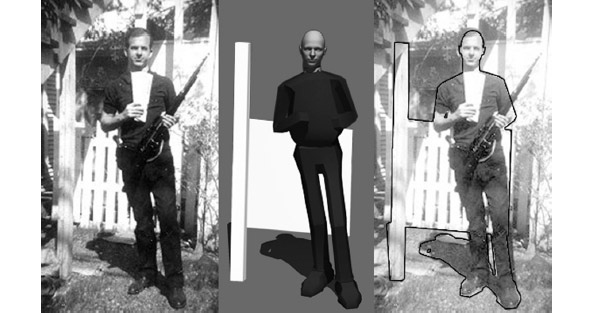

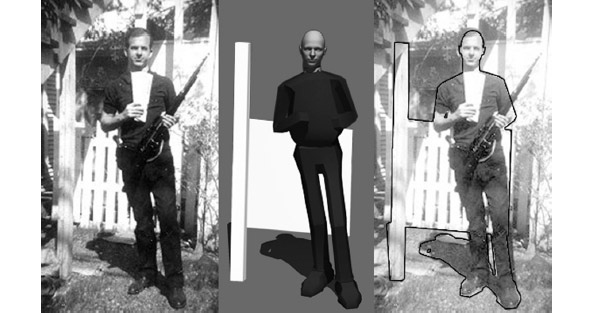

Take, for instance, this analysis of one of the photos related to the Kennedy assassination, apparently depicting an inconsistent shadow of Lee Harvey Oswald:

Pretty convincing, eh? Clearly something is going on here because the shadow is physically incorrect?

Well, no. The mistake that this analysis makes is to assume that the ground is completely flat and level. It isn't. The computer rendering is done assuming a completely flat ground. The differences in height of the original ground are mostly lost due to the poor quality of the photo, the ground being overexposed and details being lost. (Quite ironically, the irregularities in Oswald's shadow is not a sign of fakery, but on the contrary can be used to determine some of the original geometry of the ground that's not apparent directly in the photo.)

This is especially so because the image on the left above has been manipulated by the analyst to increase contrast. The original photograph looks like this:

Any unevenness of the ground is lost in the heavy contrast enhancement. Also notice how the shadow is actually blurry in the original image, while it's suspiciously sharp in the enhanced image.

(Also note how the computer model's head does not correspond perfectly to Oswald's.)

Another thing that these analyses typically fail to do is to explain how exactly the photo was allegedly "faked" in such a way that it causes the alleged artifact.

By the way, reading the original Warren Commission Report on how they determined these photographs to be genuine is interesting and enlightening. I recommend doing that. They were not idiots.