For some reason many developers have an irrational aversion towards multiple inheritance. I don't really understand why.

In object-oriented programming, inheritance is usually described as an "is-a" relationship. In other words, a class of derived type "is a" class of the base type. This means that the base class represents a more abstract and generic concept, while the derived class represents a more concrete and specialized concept (i.e. a specialized version of the more generic concept that the base class represents).

For example, in graphical user interface programming it's a very common pattern for all visual elements to be of type "Widget". In other words, Widget is a base class which represents any element which can appear on the screen.

The actual elements are then inherited from Widget. For example, a Button could be directly inherited from Widget, and so could a Label. This means that "Button is a Widget" and "Label is a Widget". "Widget" is a more generic and abstract concept, while "Button" and "Label" are more specialized, concrete versions of it.

What the "is-a" relationship means in practice is that wherever something expects an object of type Widget to be used, an object of type Button or Label can be used instead. For example, if a function expects an object of type Widget as a parameter, a Button or Label can be given to it.

However, a Button is not a Label because they have no direct relationship. Hence if something expects an object of type Button, an object of type Label cannot be given to it. There is no "is-a" relationship between them.

A class could be even more specialized. For example one could have a class "ToolbarButton" inherited from Button. This means that ToolbarButton is a special type of Button, but since it is a Button, wherever an object of the latter type is expected, an object of the former type can be given. (Naturally if a Widget is expected, an object of type ToolbarButton can also be given because ToolbarButton is also a Widget.)
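As a minimal sketch of the above in C++ (the class names come from the text, while the `name()` member and the two free functions are made up purely for illustration):

```cpp
#include <string>

// Generic, abstract concept: anything that can appear on the screen.
class Widget {
public:
    virtual ~Widget() = default;
    virtual std::string name() const { return "Widget"; }
};

// "Button is a Widget"
class Button : public Widget {
public:
    std::string name() const override { return "Button"; }
};

// "Label is a Widget" (but it has no relationship to Button)
class Label : public Widget {
public:
    std::string name() const override { return "Label"; }
};

// "ToolbarButton is a Button", and therefore also a Widget
class ToolbarButton : public Button {
public:
    std::string name() const override { return "ToolbarButton"; }
};

// Expects any Widget: Button, Label and ToolbarButton all qualify.
std::string describe(const Widget& w) { return "drawing " + w.name(); }

// Expects a Button: a ToolbarButton qualifies, a Label does not.
std::string press(const Button& b) { return "pressing " + b.name(); }
```

Here `describe()` accepts all three concrete types and `press()` accepts a `ToolbarButton`, whereas a call such as `press(Label{})` would be rejected by the compiler, because there is no "is-a" relationship between Label and Button.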

Basically the only thing that multiple inheritance adds to regular inheritance, from an object-oriented point of view, is an addition to the "is-a" relationship: "and-a".

For example, suppose that you have a class of type EventListener which can be used to receive callback function calls on certain events (e.g. when the user clicks the object with the mouse). Suppose you want your Button class to also be of type EventListener so that it can receive event callbacks.

Here's where multiple inheritance kicks in: You inherit Button from both Widget and EventListener.

Thus you are effectively saying: "A Button is a Widget and an EventListener."

It is thus of both latter types at the same time. Whenever an object of one of those latter types is expected, an object of type Button can be given. No more, no less. It's as simple as that.
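One way this "and-a" relationship might look in C++ is sketched below. Only the three class names are from the text; the members (`notify`, `onEvent`, `received`, `lastEvent`) and the helper functions `draw` and `dispatch` are assumptions invented for the example.

```cpp
#include <string>

class Widget {
public:
    virtual ~Widget() = default;
    virtual std::string name() const { return "Widget"; }
};

// A full class, not a mere interface: it has state and implemented functions.
class EventListener {
public:
    virtual ~EventListener() = default;
    // Called by the event source: counts the event, then forwards it.
    void notify(const std::string& event) {
        ++received_;
        onEvent(event);
    }
    int received() const { return received_; }

protected:
    virtual void onEvent(const std::string& event) = 0;

private:
    int received_ = 0;
};

// "A Button is a Widget and an EventListener."
class Button : public Widget, public EventListener {
public:
    std::string name() const override { return "Button"; }
    const std::string& lastEvent() const { return lastEvent_; }

protected:
    void onEvent(const std::string& event) override { lastEvent_ = event; }

private:
    std::string lastEvent_;
};

// Expects a Widget.
std::string draw(const Widget& w) { return "drawing " + w.name(); }

// Expects an EventListener.
void dispatch(EventListener& listener, const std::string& event) {
    listener.notify(event);
}
```

The same `Button` object can be handed to both `draw()` and `dispatch()`, which is all the "and-a" relationship amounts to.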

In many systems, especially when using a programming language which doesn't support full-fledged multiple inheritance, the EventListener example base class above would be implemented as a so-called "interface" (or "protocol" in some languages) instead.

Many programmers use this as some kind of excuse to claim that this is not multiple inheritance. I have a hard time understanding why they do that. Is multiple inheritance such a dreaded and frightful thing that they have to invent excuses in order to avoid admitting that they are actually using it? It's really irrational.

An interface is, basically, an abstract base class which cannot be instantiated by itself, but always has to be inherited from in order to be instantiated. Also, it's usually not possible to add any function implementations or member variables to interfaces. Often interfaces can inherit (even multiple-inherit) from other interfaces, though.

So interfaces allow a limited form of multiple inheritance, but from an object-oriented point of view this is still multiple inheritance, plain and simple. Wherever an object of the interface type is expected, any object which implements the interface can be given. Hence the interface acts as a base class type, and there's a clear "is-a" relationship between it and the class that implements it.
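C++ has no separate "interface" construct, but the same idea can be approximated with a class that contains only pure virtual functions and no member variables. The sketch below assumes that approximation; the `onEvent` and `lastEvent` members and the `fire` function are hypothetical.

```cpp
#include <string>
#include <type_traits>

// An "interface" in C++ terms: only pure virtual functions, no data members.
class EventListener {
public:
    virtual ~EventListener() = default;
    virtual void onEvent(const std::string& event) = 0;
};

class Widget {
public:
    virtual ~Widget() = default;
};

// Button extends Widget and "implements" EventListener.
class Button : public Widget, public EventListener {
public:
    void onEvent(const std::string& event) override { lastEvent = event; }
    std::string lastEvent;
};

// The interface cannot be instantiated by itself...
static_assert(std::is_abstract<EventListener>::value,
              "EventListener is abstract");
// ...yet it is a base class of Button in the ordinary "is-a" sense.
static_assert(std::is_base_of<EventListener, Button>::value,
              "Button is an EventListener");
static_assert(std::is_base_of<Widget, Button>::value,
              "Button is a Widget");

// Expects an object of the interface type; any implementer can be given.
void fire(EventListener& target) { target.onEvent("click"); }
```

From the point of view of `fire()`, nothing distinguishes this from the full-fledged case: `Button` has two base types and can be used wherever either one is expected.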

Nevertheless, many developers will go to great lengths to argue how it's not really multiple inheritance. I can't understand what they are so afraid of. Multiple inheritance is not that scary.

If function A expects an object of type X, and function B expects an object of a distinct type Y (i.e. there's no direct relationship between X and Y), and then there's an object of type Z which can be given to both A and B, that means, by definition, that Z has been multiple-inherited from X and Y ("Z is an X and a Y"). Plain and simple.

This is the case even if either X or Y is an "interface", hence it changes nothing. If you are implementing interfaces, you are using multiple inheritance. Get over it.

The real reason why developers are so afraid of multiple inheritance is the so-called "diamond inheritance" situation. (As if multiple inheritance somehow automatically implied diamond inheritance. That's not the case, of course. Most, if not all, object-oriented programming languages support multiple inheritance in some form, and many of them avoid the diamond inheritance problem altogether by limiting it somehow, e.g. by restricting it to so-called "interfaces".)

Even in programming languages which have full-fledged multiple inheritance support without limitations, and where the diamond inheritance situation can happen, it's not such a scary thing either. It's just a question of design choice what to do about it. In most cases you can simply avoid it (but still get the advantages of not being limited to the crippled "interface" solution).

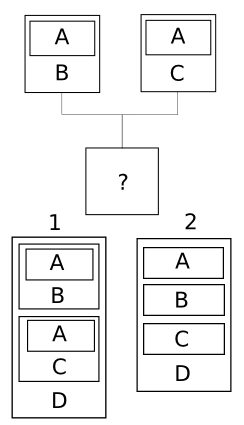

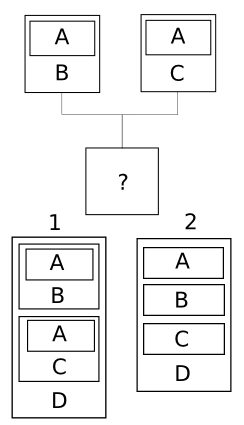

The problem is this: If there's a base class A, and then class B inherits from A, and class C inherits from A, and finally class D inherits from both B and C, what should happen?

The graph on the right visualizes the two possibilities (1 and 2). In other words, should A be duplicated inside D, or should it be merged so that both B and C use the same A (rather than having their own separate instances of it)? For instance, if A has some member variable, should this member variable be instantiated twice inside D (once for the B part of the object and once for the C part), or only once (and have both B and C share that one variable)?

The question becomes important in many situations. For example, if some function expects an object of type B and an object of type D is given to it, and this function calls a method of this object that modifies the member variable inside A, should the modified variable be "local" to B, or should it be a variable which is shared by both B and C (in other words, should the methods of C see the change)?

There may be situations where one wants the B and C parts inside D to be completely independent and not share anything (so that they act as if they were separate objects), while in other situations what one wants is for the A part to appear only once inside D and for B and C to share it (so that if one of them modifies something inside A, the other sees the modification).

(Solution 1, where the common base is not shared, is easier for a compiler to implement, while solution 2 where it is shared, is quite a lot harder. However, this is just a compiler-technical issue and the programmer doesn't really have to worry about that, even though some developers make it sound like a really big deal. It isn't.)

Ultimately it's just a question of design: Which one do you want? For example, C++ offers the programmer both choices (ordinary inheritance duplicates A, while virtual inheritance shares it), so if the programmer really needs a diamond inheritance solution, they can choose how it will behave. (The compiler will take care of the difficulties behind the scenes.)
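To make the two choices concrete, here is a small C++ sketch. The names A, B, C and D follow the text; the `Shared*` variants, the `value` member and the `modifyThroughB` functions are invented for the example.

```cpp
// The common base class with one member variable.
struct A {
    int value = 0;
};

// Solution 1: ordinary inheritance. D contains two separate A parts,
// one belonging to B and one belonging to C.
struct B : A {
    void setValue(int v) { value = v; }  // writes to B's copy of A
};
struct C : A {
    int getValue() const { return value; }  // reads C's copy of A
};
struct D : B, C {};

// Solution 2: virtual inheritance. The A part appears only once inside
// SharedD, and SharedB and SharedC both refer to it.
struct SharedB : virtual A {
    void setValue(int v) { value = v; }
};
struct SharedC : virtual A {
    int getValue() const { return value; }
};
struct SharedD : SharedB, SharedC {};

// Functions that only know about the "B part" of whatever they are given.
void modifyThroughB(B& b) { b.setValue(42); }
void modifyThroughB(SharedB& b) { b.setValue(42); }
```

Passing a `D` to `modifyThroughB()` changes only the copy of `value` that belongs to the B part, so `getValue()` (which lives in C) still sees 0. Passing a `SharedD` changes the single shared `value`, so `getValue()` sees 42. Note that with solution 1, writing `d.value` directly on a `D` object is ambiguous and will not compile unless you say which copy you mean (e.g. `d.B::value`).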

Granted, in many situations it might be better to try to re-design the program so that diamond inheritance is not needed. However, it's really not something to be scared of. If it's the best solution for a problem, it makes little sense to try to artificially avoid it.

It makes even less sense to diss multiple inheritance in general simply because of the diamond inheritance problem. Multiple inheritance doesn't automatically imply diamond inheritance, and the former can be extremely useful even without the latter (which is why most object-oriented languages offer it in one way or another, even if it has to be limited to avoid the diamond inheritance situation).